Agency, AI Trends, The End of Recruiting and How People Are Really Using Gen AI

Questions are the new moat.

Good morning

I hope you are doing great. My apologies for not sending the newsletter last week. We ran the first OpenAI hackathon in Poland and then wrapped up the first edition of the AI_managers program. Both were a huge success. Nothing brings me more pleasure than helping others learn more and build.

I made sure that this week’s edition is worth the wait.

In today's edition, among other things:

Agency Is Eating the World

The 2025 AI Index Report

A History of Thinking on Paper

The End of Recruiting

How People Are Really Using Gen AI in 2025

AI Agent Pricing Framework

The Guide to Marketing Attribution

Onwards!

Agency Is Eating the World

Why the next dominant force in tech isn’t scale, but individual action.

There was a time when building something meaningful required permission: capital, infrastructure, teams, media. Then software ate the world. Now we have the sequel: agency is eating the world.

We’re entering a new phase where leverage no longer comes from institutional size, but from individual ability to act—quickly, autonomously, and without asking for permission. Not just “move fast and break things,” but move alone and build things that compound.

This shift is powered by:

AI tools that collapse operational cost: One person can now write, code, design, and deploy at a level that previously required a team.

Distribution that rewards clarity over consensus: Twitter, Substack, and Farcaster reward speed and conviction. Institutions are still editing the memo.

Trust shifting from brands to people: Reputation is earned in public, in real-time. Not through logos, but through output.

What this means for founders and operators:

Don’t build for scale. Build for leverage. Ask: how much can one person do with the tools available today?

Speed is now a wedge. AI-native teams will ship faster, test cheaper, and adapt quicker than incumbents still stuck in process.

Institutions are breaking. Talent is exiting. Trust is decentralizing. And the best ideas will increasingly come from people who don’t need permission to execute.

The industrial era optimized for coordination. The software era optimized for distribution.

The agency era optimizes for execution.

The 2025 AI Index Report

Stanford’s 2025 AI Index dropped recently, and it’s a monster—nearly 500 pages of data, charts, and trendlines on everything AI: research, models, economics, safety, public opinion. It's the clearest picture yet of where AI actually is right now.

Key Insights:

Industry now dominates AI frontier research: 91% of major machine learning models released in 2023 were from industry, not academia.

AI matches or outperforms humans in key benchmarks:

ImageNet classification: 90.2% (better than human baseline)

Visual reasoning (VQAv2): 85.5% (human level)

Massive multitask language understanding (MMLU): 88.8% (above human avg.)

Training costs are reaching staggering levels:

OpenAI’s GPT-4 cost an estimated $78 million to train.

Google Gemini Ultra likely cost $191 million, up from under $10M just two years prior.

Model evaluations are more rigorous:

The number of benchmarks used to test LLMs increased by 50% year-over-year.

Safety and robustness metrics are beginning to standardize, but progress remains slow.

AI use in scientific research is exploding:

The number of papers citing LLMs like ChatGPT in medicine, biology, and chemistry grew 11x in one year.

Public opinion is polarizing:

52% of Americans now express more concern than excitement about AI, up from 37% in 2022.

Those are the data points. Here is what I think is happening in terms of trends and patterns:

1. Industry-Led Progress

Industry actors now invest the most in model development, data pipelines, and infrastructure. This is shifting AI’s center of gravity from universities to tech giants:

“The three largest language models last year came from Google, OpenAI, and Anthropic. None came from universities.” — Turing Post

This shift may diminish open research and constrain scientific reproducibility.

2. Scaling Laws Still Work—but at a Price

Scaling laws—the principle that bigger models and more data reliably improve performance—remain valid. But they’re hitting diminishing returns:

“The cost to train frontier models is now doubling faster than Moore’s Law can keep up.” — AJS AI

3. Geopolitical AI Investment

China leads in the number of AI patents and total publications. However, the U.S. still leads in high-quality, impactful work, especially large-scale language models.

4. Rapid Integration into Science and Workflows

AI tools are being used in drug discovery, materials science, and law. They’re accelerating research cycles:

“AI is becoming a lab partner, not just a paper author.”

5. Governance Struggles to Keep Pace

Despite rapid AI capability growth, global regulation remains fragmented. Only the EU's AI Act offers a comprehensive legal framework—others are piecemeal or voluntary.

Current Challenges and Limitations:

Compute centralization: Training powerful AI models requires access to rare, expensive compute clusters—often controlled by just a few firms.

Benchmark saturation: Models now "solve" benchmarks without necessarily demonstrating robust real-world reasoning or truthfulness.

Opacity and reproducibility: Many new state-of-the-art models (e.g. GPT-4, Gemini) are closed source and unreproducible, raising concerns for transparency.

Safety vs. speed tradeoffs: Most firms invest more in scaling than safety testing, despite public concerns and political scrutiny.

What’s Next?

Open-weights models may re-emerge as viable alternatives to closed LLMs, especially for academic and regional use.

Agentic workflows (LLMs acting autonomously or cooperatively) will drive the next productivity leap—but require new evaluation standards.

AI policy coordination between the U.S., the EU, and Asia is essential to mitigate asymmetric deployment risks and race dynamics.

AI for science will grow: Tools like AlphaFold, ESMFold, and language models for chemistry will drive breakthroughs in medicine and materials.

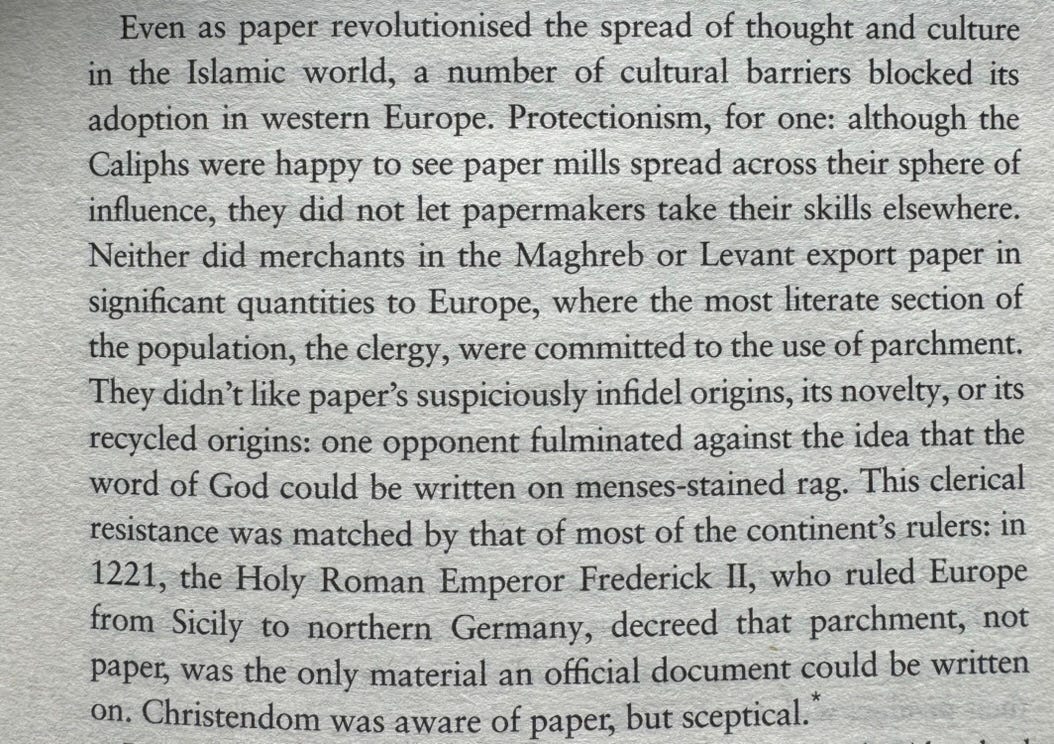

A History of Thinking on Paper

While we are on the topic of regulation, here’s a nice parallel from the 13th century, from a great book I’m reading, The Notebook: A History of Thinking on Paper:

History does rhyme.


Subscribe to Bartek Pucek to keep reading this post and get 7 days of free access to the full post archives.