The State of AI, How to Live a Good Life, and How to Design a Great Pricing Page

Few things for a long time.

Good morning

Today’s edition is mostly about AI, but from many different angles. Enjoy.

In today's edition, among other things:

The State of AI 2024

AI in Organizations

How to Live a Good Life

Unlocking AI in GTM

The Emerging Startup Playbook

The DNA of a Great Pricing Page

Onwards!

The State of AI 2024

Every year for the past 7 (!) years, I’ve covered the State of AI report. Below are my notes on what you need to know (I will probably re-read the report a few more times).

Let’s go a bit deeper, as the report is a must-read for everyone:

Model Performance Convergence

The report highlights a significant convergence in performance between frontier AI labs, especially with open-source models achieving parity with proprietary ones.

Llama 3.1 405B: The open-source model Llama 3.1 has reached a performance level that competes with leading proprietary models such as GPT-4o and Claude 3.5 Sonnet, demonstrating excellence in areas like reasoning, mathematics, and multilingual capabilities.

Llama 3.2: Meta’s Llama 3.2 takes things further by adding multimodal capabilities, expanding from text-only processing to supporting both text and visual modalities. This development closes the gap between open and proprietary AI, bringing multimodal AI to the open-source community.

The narrowing divide between proprietary and open-source models fosters a more competitive AI development landscape, enabling more players to innovate and contribute. This will be fascinating to watch unfold!

Chinese AI Advancements

Despite US sanctions and wider geopolitical pressure, Chinese AI labs have made substantial progress in AI capabilities.

Top Performers: Chinese labs such as DeepSeek, 01.AI (Yi), and Alibaba (Qwen) have climbed community leaderboards, especially in domains like mathematics and coding.

Resourcefulness: To overcome sanctions-related limitations, Chinese researchers have relied on strategies such as utilizing semiconductor stockpiles, leveraging approved hardware, and even resorting to smuggling and cloud-based access. These efforts illustrate the resilience and adaptability of the Chinese AI sector.

This resilience highlights China's determination to maintain competitiveness and demonstrates that international restrictions have not halted progress in the AI sector.

Efficiency Improvements in AI Models

Efficiency has become a crucial area of focus for AI model development, as researchers push to enhance performance while reducing computational demands.

Pruning and Distillation: Techniques such as pruning layers, neurons, and embeddings, as well as model distillation, have led to significant efficiency gains. These methods allow smaller models to achieve similar levels of performance to their larger counterparts.

Text-to-Text and Text-to-Image Gains: Such techniques have been especially effective in optimizing text-to-text and text-to-image models, reducing the training costs and energy requirements.
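
For the more hands-on readers, here is a minimal sketch of the core idea behind distillation: a smaller "student" model is trained to match the softened output distribution of a larger "teacher". This is a toy illustration, not the method of any specific paper in the report. The function names, the temperature value, and the example logits are all assumptions made for the sketch.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between softened teacher and student distributions.

    Scaling by temperature squared is a common convention that keeps the
    size of the loss comparable across temperatures (an assumption here).
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )
    return kl * temperature ** 2


# Hypothetical logits for a single three-class prediction.
teacher = [2.0, 1.0, 0.1]
student = [1.5, 1.2, 0.3]
print(distillation_loss(teacher, student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what pushes the smaller model toward the larger one's behaviour during training.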

Expanded Applications of LLMs

Large Language Models (LLMs) are breaking new ground in scientific and industrial applications, pushing beyond traditional language-focused tasks.

De Novo Protein Design: Researchers have utilized LLMs for creating entirely new proteins with specific functions, showing potential for breakthroughs in medical science and biotechnology.

Gene Editing Tools: LLMs are also being applied to develop functional gene editors, showcasing their capacity to assist in complex biological engineering tasks.

I bet we will see unparalleled utility in diverse fields such as genomics, synthetic biology, and beyond. (My to-do for 2025: go deeper into biology)

Hardware and Infrastructure Challenges

NVIDIA continues to dominate AI hardware: in AI research, its chips are used 11 times more often than those of all competitors combined.

Cerebras Systems has emerged as a notable challenger, offering specialized AI hardware that aims to compete with NVIDIA’s products.

Frontier Lab Performance

One of the key themes of the report is the convergence in performance across different AI research labs, particularly between well-funded proprietary efforts and open-source initiatives.

Closing the Gap: Open-source projects are steadily narrowing the performance gap with major corporate AI labs, indicating a democratization of AI research and development.

Impact on Innovation: This convergence pushes both proprietary and open-source entities to innovate not just in model size or accuracy but also in efficiency, accessibility, and applicability across different domains.

And, you also can’t have nice things in the EU:

It’s still absurd that when you are building a company in the EU, you get access to state-of-the-art AI models later than, say, entrepreneurs in the Republic of South Africa.

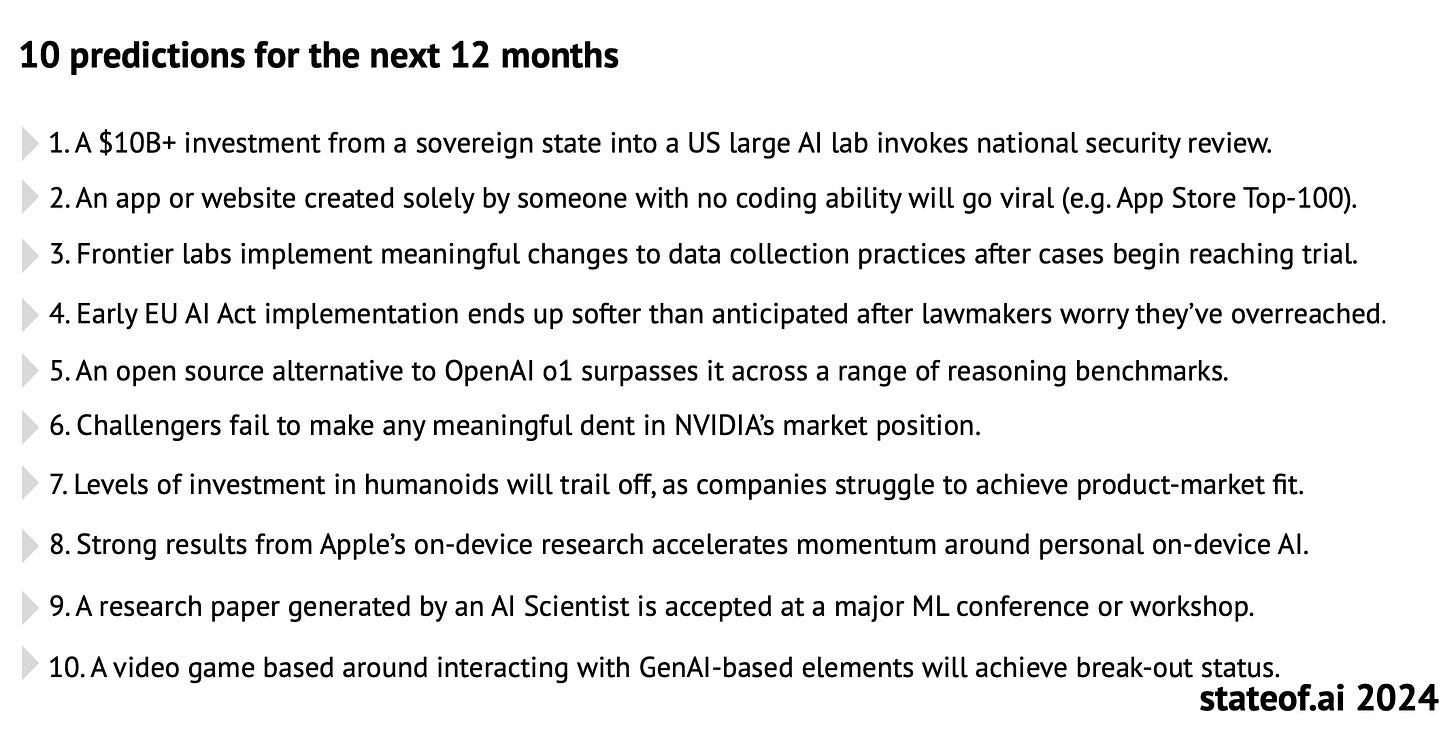

Every State of AI Report ends with predictions. Here are some for the year ahead:

AI in Organizations

The excellent Ethan Mollick highlights a significant disconnect in how AI is perceived at work. Studies show that many employees are using AI to improve productivity, such as the 26% productivity gains seen in coding tasks with GitHub Copilot. However, organizational leaders often report little noticeable impact from AI beyond narrow use cases. The explanation is that individual gains do not automatically translate into organizational gains.

Many employees are secretly using AI at work, but are hesitant to share their use due to several fears:
